| Title | Integration of GridFTP with FreeLoader storage system |
|---|---|
| Student | Hesam Ghasemi |
| Mentor | Rajkumar Kettimuthu |

Abstract:

Scientific experiments produce large volumes of data, which require cost-conscious data stores as well as reliable data transfer mechanisms. GridFTP is a high-performance, secure, reliable data transfer protocol optimized for high-bandwidth wide-area networks. GridFTP, however, assumes the support of a high-performance parallel file system, which is a relatively expensive resource. FreeLoader is a storage system that aggregates idle storage space from workstations connected within a local area network to build a low-cost, yet high-performance, data store. FreeLoader breaks files into chunks and distributes them across storage nodes, thus enabling parallel access to files. A central manager keeps track of file metadata as well as the location of the chunks associated with each file.

This project will integrate the Globus project's GridFTP implementation with FreeLoader to reduce the cost and increase the performance of GridFTP deployments. The integration of these two open-source systems will address three main problems. First, FreeLoader storage nodes will be exposed as GridFTP data transfer processes (DTPs). Second, the assumption made by the current GridFTP implementation, namely that all data is available at all DTPs, will be relaxed by integrating the GridFTP server with FreeLoader's data location mechanisms. Finally, load balancing mechanisms will be added to the GridFTP server implementation to match FreeLoader's ability to stripe and replicate data across multiple nodes.

Because files at a FreeLoader-backed GridFTP site are spread over multiple FreeLoader nodes (exposed as GridFTP DTPs), the integration will need to orchestrate multiple connections to execute a single file transfer. The current implementation of GridFTP cannot handle cases where the number of DTPs on the sender and receiver sides does not match. For instance, when a single client accesses a GridFTP server with N DTPs, the server will need to manage the connections so that only one server DTP connects to the client at a time. Once the first DTP has finished its transfer, the second server DTP connects to the client and executes its transfer, and so on. The same mechanism can be applied when the client-to-server node relationship is N-to-M. This mechanism has two advantages: the load is balanced across the DTP nodes, and it supports the FreeLoader data layout, in which each DTP stores some of a file's chunks.

The design and implementation requirements are: code changes to either system should be minimal, to ease future integration with the mainstream GridFTP/FreeLoader code; GridFTP client code changes should be avoided; and the integration components should be implemented in C, since both FreeLoader and GridFTP are implemented in C.
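The N-to-1 scheduling idea described above can be sketched in C, the project's stated implementation language. This is a minimal illustration under stated assumptions, not FreeLoader's or GridFTP's actual code: the `chunk_location` table is a hypothetical stand-in for the metadata kept by FreeLoader's central manager, chunks are assumed to be striped round-robin across DTPs, and `stripe_round_robin`, `schedule_sequential`, and `chunks_on_dtp` are illustrative names. The schedule lets one server DTP "connect" at a time and send every chunk it stores before the next DTP takes over.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the chunk locations FreeLoader's manager tracks. */
typedef struct {
    size_t chunk_index;  /* position of the chunk within the file */
    int dtp_id;          /* storage node (exposed as a DTP) that holds it */
} chunk_location;

/* Assumed layout: chunk i is stored on DTP i % num_dtps (round-robin striping). */
static void stripe_round_robin(chunk_location *table, size_t num_chunks, int num_dtps)
{
    assert(table != NULL && num_dtps > 0);
    for (size_t i = 0; i < num_chunks; i++) {
        table[i].chunk_index = i;
        table[i].dtp_id = (int)(i % (size_t)num_dtps);
    }
}

/* N-to-1 schedule: DTPs take turns connecting to the single client. Each DTP
   sends every chunk it stores, then the next DTP connects. Chunk indices are
   written to `order` in transmission order; the function returns how many. */
static size_t schedule_sequential(const chunk_location *table, size_t num_chunks,
                                  int num_dtps, size_t *order)
{
    size_t sent = 0;
    for (int dtp = 0; dtp < num_dtps; dtp++) {      /* one active DTP at a time */
        for (size_t i = 0; i < num_chunks; i++) {
            if (table[i].dtp_id == dtp)
                order[sent++] = table[i].chunk_index;
        }
    }
    return sent;
}

/* Per-DTP load (number of chunks it sends), to check how evenly work is spread. */
static size_t chunks_on_dtp(const chunk_location *table, size_t num_chunks, int dtp)
{
    size_t count = 0;
    for (size_t i = 0; i < num_chunks; i++)
        if (table[i].dtp_id == dtp)
            count++;
    return count;
}
```

With 7 chunks striped over 3 DTPs, DTP 0 sends chunks 0, 3, 6, then DTP 1 sends 1, 4, then DTP 2 sends 2, 5. Because chunks leave the server out of file order, a real transfer would need each data block to carry its file offset, as GridFTP's extended block mode (MODE E) does.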

Tuesday, May 20, 2008

Google Summer of Code project: Integration of GridFTP with FreeLoader storage system

Computational Biology On The Grid: Decoupling Computation And I/O With ParaMEDIC

CCT talk:

http://www-unix.mcs.anl.gov/~balaji/#publications

Abstract:

Many large-scale computational biology applications simultaneously rely on multiple resources for efficient execution. For example, such applications may require both large compute and large storage resources; however, very few supercomputing centers can provide large quantities of both. Thus, data generated at the compute site often has to be moved to a remote storage site for storage or for visualization and analysis. Clearly, this is not an efficient model, especially when the two sites are distributed over a Grid. In this talk, I'll present a framework called "ParaMEDIC: Parallel Metadata Environment for Distributed I/O and Computing", which uses application-specific semantic information to convert the generated data into orders-of-magnitude smaller metadata at the compute site, transfer the metadata to the storage site, and re-process the metadata at the storage site to regenerate the output. Specifically, ParaMEDIC trades a small amount of additional computation (in the form of data post-processing) for a potentially significant reduction in the data that needs to be transferred in distributed environments. The ParaMEDIC framework allowed us to use nine different supercomputers distributed within the U.S. to sequence-search the entire microbial genome database against itself and store the one petabyte of generated data in Tokyo, Japan.
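The trade-off ParaMEDIC exploits can be made concrete with a back-of-the-envelope model. The sketch below is a hypothetical cost model, not taken from the ParaMEDIC work: it compares shipping `bytes` of raw output over a link of `bandwidth` bytes/s with converting the output into metadata that is `reduction` times smaller, shipping that, and regenerating the output at the storage site. The function names and parameters are illustrative assumptions.

```c
#include <assert.h>

/* Direct model: move the raw output over the wide-area link. */
static double direct_time(double bytes, double bandwidth)
{
    assert(bandwidth > 0.0);
    return bytes / bandwidth;
}

/* Metadata model (assumed): convert at the compute site, ship the smaller
   metadata, then regenerate the output at the storage site. */
static double metadata_time(double bytes, double bandwidth, double reduction,
                            double t_convert, double t_regen)
{
    assert(bandwidth > 0.0 && reduction >= 1.0);
    return t_convert + (bytes / reduction) / bandwidth + t_regen;
}

/* Returns 1 when the metadata route finishes sooner than shipping raw data. */
static int metadata_wins(double bytes, double bandwidth, double reduction,
                         double t_convert, double t_regen)
{
    return metadata_time(bytes, bandwidth, reduction, t_convert, t_regen)
           < direct_time(bytes, bandwidth);
}
```

With illustrative numbers (1 TB of output, 100 MB/s, a 100-fold reduction, 500 s to convert and 1000 s to regenerate), `direct_time` gives 10,000 s and `metadata_time` gives 1,600 s. For only 1 GB of output the direct transfer takes 10 s and the extra computation no longer pays off, which is why the approach targets very large outputs.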

GENI - The Global Environment for Network Innovations Project

CCT talk: Chip Elliott, BBN Technologies and GENI PI/PD/Chief Engineer

http://www.geni.net/

Abstract:

GENI, the Global Environment for Network Innovations, is an experimental facility designed to allow experiments on a wide variety of problems in communications, networking, distributed systems, cyber-security, and networked services and applications. The emphasis is on enabling researchers to experiment with radical network designs far more realistically than they can today. Researchers will be able to build their own new versions of the “net”, or to study the “net” in ways that are not possible today. Compatibility with the Internet is NOT required. The purpose of GENI is to give researchers the opportunity to experiment unfettered by assumptions or requirements, and to support those experiments at a large scale with real user populations.

GENI is being proposed to NSF as a Major Research Equipment and Facilities Construction (MREFC) project. The MREFC program is NSF’s mechanism for funding large infrastructure projects. NSF has funded MREFC projects in a variety of fields, such as the Laser Interferometer Gravitational-Wave Observatory (LIGO), but GENI would be the first MREFC project initiated and designed by the computer science research community.

Friday, May 16, 2008

MOPS (Managed Object Placement Service)

MOPS (Managed Object Placement Service) is an enhancement to the Globus GridFTP server that lets you manage some of the resources needed for data transfers more efficiently.

MOPS 0.1 release includes the following:

- GFork - This is a service like inetd that listens on a TCP port and runs a configurable executable in a child process whenever a connection is made. GFork also creates bi-directional pipes between the child processes and the master service. These pipes are used for interprocess communication between the child process executables and a master process plugin. More information on GFork can be found here.

- GFork master plugin for GridFTP - This master plugin provides enhanced functionality such as dynamic backend registration for striped servers, managed system memory pools, and internal data monitoring for both striped and non-striped servers. More information on the GridFTP master plugin and information on how to run the Globus GridFTP server with GFork can be found here.

- Storage usage enforcement using Lotman - All data sent to a Lotman-enabled GridFTP server and written to the Lotman root directory will be managed by Lotman. Information on how to configure Lotman and run it with the Globus GridFTP server can be found here.

- Pipelining data transfer commands - GridFTP is a command-response protocol: a client sends one command and then waits for a "Finished response" before sending another. Paying this round-trip overhead for every file hurts performance when a large data set is partitioned into many small files. Pipelining allows the client to have many outstanding, unacknowledged transfer commands at once: instead of waiting for the "Finished response" message, the client is free to send transfer commands at any time. Pipelining is enabled by using the `-pp` option with `globus-url-copy`.
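The master/child arrangement GFork uses (run a child process per connection and keep a bidirectional channel to it) can be illustrated with POSIX `socketpair` and `fork`. This is a minimal sketch, not GFork's code: it omits the TCP listen/accept loop and the plugin interface, and `child_main` and `spawn_child_and_exchange` are hypothetical names. A `socketpair` is used because, unlike a single `pipe`, each end is both readable and writable.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Child side: stands in for the per-connection executable GFork would run.
   It reads one request from the master and answers "ack" on the same fd. */
static int child_main(int fd)
{
    char buf[64];
    ssize_t n = read(fd, buf, sizeof buf);
    if (n <= 0)
        return 1;
    return write(fd, "ack", 3) == 3 ? 0 : 1;
}

/* Master side: create a bidirectional channel, fork the child, send a request,
   and read the child's reply. Returns reply bytes read, or -1 on error. */
static ssize_t spawn_child_and_exchange(const char *request, char *reply, size_t reply_len)
{
    int fds[2];
    assert(request != NULL && reply != NULL);
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, fds) < 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {
        close(fds[0]);                   /* child keeps only its end */
        int rc = child_main(fds[1]);
        close(fds[1]);
        _exit(rc);
    }

    close(fds[1]);                       /* master keeps only its end */
    ssize_t got = -1;
    size_t req_len = strlen(request);
    if (write(fds[0], request, req_len) == (ssize_t)req_len) {
        shutdown(fds[0], SHUT_WR);       /* signal end of request, keep reading */
        got = read(fds[0], reply, reply_len);
    }
    close(fds[0]);
    waitpid(pid, NULL, 0);               /* reap the child, as a forking server must */
    return got;
}
```

In a real inetd-style service the child would typically also inherit the accepted TCP socket; the sketch keeps only the master-to-child channel that the MOPS description highlights.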
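Why per-file round trips hurt small-file transfers can be seen with an idealized model. The sketch assumes (hypothetically) that every file costs one command/response round trip of `rtt` seconds plus `file_bytes / bandwidth` seconds of data movement, and that pipelining hides all but the first round trip; real servers will land somewhere between the two figures. The function names are illustrative.

```c
#include <assert.h>

/* Without pipelining, the client waits for each "Finished response", so one
   round trip is paid serially for every file. */
static double serial_time(int files, double file_bytes, double bandwidth, double rtt)
{
    assert(files >= 0 && bandwidth > 0.0);
    return files * (rtt + file_bytes / bandwidth);
}

/* With pipelining, commands are sent back-to-back, so (ideally) only the first
   round trip is exposed and the rest overlap with data movement. */
static double pipelined_time(int files, double file_bytes, double bandwidth, double rtt)
{
    assert(files >= 0 && bandwidth > 0.0);
    if (files == 0)
        return 0.0;
    return rtt + files * (file_bytes / bandwidth);
}
```

For 1000 files of 1 MB over a 100 MB/s path with a 0.1 s round trip, the serial model gives about 110 s and the pipelined model about 10.1 s, so the round trips, not the data, dominate the unpipelined case.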

Wednesday, May 14, 2008

GRIDS Lab Topics: Related Theses/Dissertations Worldwide

- Daniel Colin Vanderster, Resource Allocation and Scheduling Strategies on Computational Grids, Ph.D. Thesis, University of Victoria, February 2008.

- SungJin Choi, Group-based Adaptive Scheduling Mechanism in Desktop Grid, Ph.D. Thesis, Korea University, June 2007.

- Bjorn Schnizler, Resource Allocation in the Grid: A Market Engineering Approach, Ph.D. Thesis, Karlsruhe University, 2007.

- Dang Minh Quan, A Framework For SLA-Aware Execution of Grid-Based Workflows, Ph.D. Thesis, Informatik und Mathematik der Universitaet, November 2006.

- Tummalapalli Sudhamsh Reddy, Bridging Two Grids: The SAM-Grid / LCG integration Project, Thesis of Master in Computing Science, The University of Texas, Arlington, May 2006.

- Flavia Donno, Storage Management and Access in WLHC Computing Grid, Ph.D. Thesis, University of Pisa, 2006.

- Patricia Kayser Vargas Mangan, GRAND: A Model for Hierarchical Application Management in Grid Computing Environment, Ph.D. Thesis, COPPE/Federal University of Rio de Janeiro, Brazil, March 2006.

- Anoop Rajendra, Integration of the SAM-Grid Infrastructure to the DZero Data Reprocessing Effort, Thesis of Master in Computing Science, The University of Texas, Arlington, December 2005.

- Bimal Balan, Enhancements to the SAM-Grid Infrastructure, Thesis of Master in Computing Science, The University of Texas, Arlington, December 2005.

- Tevfik Kosar, Data Placement in Widely Distributed Systems, Ph.D. Thesis, University of Wisconsin-Madison, August 2005.

- Aditya Nishandar, Grid-Fabric Interface For Job Management In Sam-Grid, A Distributed Data Handling And Job Management System For High Energy Physics Experiments, Thesis of Master in Computing Science, The University of Texas, Arlington, December 2004.

- Sankalp Jain, Abstracting the heterogeneities of computational resources in the SAM-Grid to enable execution of high energy physics applications, Thesis of Master in Computing Science, The University of Texas, Arlington, December 2004.

- Gurmeet Singh Manku, Dipsea: A Modular Distributed Hash Table, Ph.D. Thesis, Stanford University, August 2004.

- Adriana Ioana Iamnitchi, Resource Discovery in Large Resource-Sharing Environments, Ph.D. Dissertation, The University of Chicago, December 2003.

- Michal Karczmarek, Constrained and Phased Scheduling of Synchronous Data Flow Graphs for StreamIt Language, Thesis of Master of Science in CS, Massachusetts Institute of Technology (MIT), USA, December 2002.

- Abhishek S. Rana, A globally-distributed grid monitoring system to facilitate HPC at D0/SAM-Grid (Design, development, implementation and deployment of a prototype), Thesis of Master in Computing Science, The University of Texas, Arlington, Nov. 2002.

- Akiko Nakaniwa, Optimal Design of System Resource Management for Distributed Networks, Ph.D. Dissertation, Department of Electronics Engineering, Faculty of Engineering, Kansai University, March 2002.

- Rajkumar Buyya, Economic-based Distributed Resource Management and Scheduling for Grid Computing, Ph.D. Thesis, Monash University, Melbourne, Australia, April 12, 2002.

- Carlos A. Varela, Worldwide Computing with Universal Actors: Linguistic Abstractions for Naming, Migration, and Coordination, Ph.D. Thesis, University of Illinois at Urbana-Champaign, 2001.

- Heinz Stockinger, Database Replication in World-Wide Distributed Data Grids, Institute of Computer Science and Business Informatics, University of Vienna, Austria, November 2001.

- Heinz Stockinger, Multi-Dimensional Bitmap Indices for Optimising Data Access within Object Oriented Databases at CERN, University of Vienna, Austria, November 2001.

- Luis F.G. Sarmenta, Volunteer Computing, Ph.D. Thesis, Massachusetts Institute of Technology (MIT), USA, June 2001.

- Jonathan Bredin, Market-based Control of Mobile Agents, Ph.D. Thesis, Dartmouth College, Hanover, NH, USA, June 2001.

- Andrea Carol Arpaci-Dusseau, Implicit Coscheduling: Coordinated Scheduling with Implicit Information in Distributed Systems, Ph.D. Thesis, University of California at Berkeley, December 1998.

- Daniel M. Zimmerman, A Preliminary Investigation into Dynamic Distributed Workflow, M.S. Thesis, California Institute of Technology, May 1998.

Sunday, May 11, 2008

GROMACS Flow Chart

Version 3.3

This is a flow chart of a typical GROMACS MD run of a protein in a box of water. A more detailed example is available in the Getting Started section. Several steps of energy minimization may be necessary; each consists of a grompp -> mdrun cycle.

| Step | Program | Input | Output |
|---|---|---|---|
| Generate a GROMACS topology | pdb2gmx | eiwit.pdb | conf.gro, topol.top |
| Enlarge the box | editconf | conf.gro | conf.gro |
| Solvate protein | genbox | conf.gro, topol.top | conf.gro, topol.top |
| Generate mdrun input file | grompp | conf.gro, topol.top, grompp.mdp | topol.tpr |
| Continuation (optional) | tpbconv | topol.tpr, traj.trr | topol.tpr |
| Run the simulation (EM or MD) | mdrun | topol.tpr | traj.xtc, ener.edr, traj.trr |
| Analysis | g_..., ngmx, g_energy | traj.xtc, ener.edr | |

Computational Biomolecular Dynamics Group

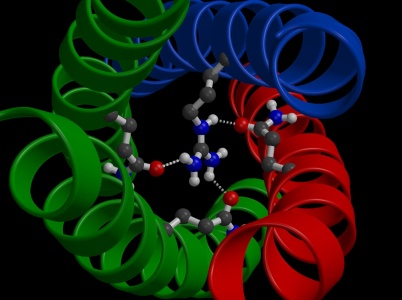

We carry out computer simulations of biological macromolecules to study the relationship between dynamics and function.

Using molecular dynamics simulations and other computational tools, we predict the dynamics and flexibility of proteins, membranes, carbohydrates, and polynucleotides to study biological function and dysfunction at the atomic level.

System Biology

The Human Proteome Folding Project will use the computing power of millions of computers to predict the shapes of human proteins about which researchers currently know little. From these shapes, scientists hope to learn about the functions of these proteins, since the shape of a protein is inherently related to how it functions in our bodies. This database of protein structures and putative functions will let scientists take the next steps toward understanding how diseases that involve these proteins work, and ultimately how to cure them.
