HUMAN PROTEOME FOLDING PROJECT

Overview

The Human Proteome Folding Project will use the computing power of millions of computers to predict the shapes of human proteins about which researchers currently know little. From these shapes, scientists hope to learn about the functions of these proteins, because a protein's shape is inherently related to how it functions in our bodies. This database of protein structures and putative functions will let scientists take the next steps in understanding how diseases that involve these proteins work and, ultimately, how to cure them.

Proteins could be said to be the most important molecules in living beings. Just about everything in your body involves or is made of proteins. Proteins are long chains made up of smaller molecules called amino acids. There are 20 different amino acids that make up all proteins, so one can think of the amino acids as beads of 20 different colors. Sometimes hundreds of them make up one protein.

However, proteins typically don't stay as long chains. As soon as the chain of amino acids is built, it folds and tangles up into a more compact mass, ending up in a particular shape. This process is called protein folding. Protein folding occurs because the various amino acids like to stick to each other following certain rules. You can think of the amino acids (the beads on a string) as being sticky, but sticky in such a way that only certain colors can stick to certain other colors. The amino acid chains built in the body must fold up in a particular way to make useful proteins. The cell has mechanisms to help proteins fold properly and mechanisms to get rid of improperly folded proteins.

Each gene specifies the order of the amino acids for one protein. The gene itself is a section of a long chain molecule called DNA. The collection of all human genes is known as "the human genome". In recent years scientists have sequenced the human genome and, depending on how genes are counted, found over 30,000 genes in it. Each of these genes tells the cell how to build the chain of amino acids for one protein, and the collection of all human proteins is known as "the human proteome." What the genes don't tell is exactly how the proteins will fold into their compact final form. The final shape of a protein is very important because it determines what the protein can do and which other proteins it can connect to or interact with. You can think of protein shapes as puzzle pieces.
For example, muscle proteins connect to each other to form a muscle fiber. They stick together that way because of their shapes and certain other factors related to those shapes. Everything that happens in cells, and in the body, is very specifically controlled by protein shapes. For example, the proteins of a virus or a bacterium may have particular shapes that interact with human proteins or the human cell membrane and let it infect the cell. This is obviously an oversimplified description, but it shows how important the shapes of proteins are. Knowing these shapes lets us understand how proteins perform their functions and also how diseases prevent proteins from doing what they must to maintain a healthy cell and body.

When your grid agent is running, it is trying to fold a single protein from the set of human proteins with no known shape. The program that the grid agent runs is called Rosetta. The client will try millions of shapes and return the best 500 shapes it can find to the central server. The quality of each shape the grid agent tries is judged by the Rosetta score, which examines the packing of amino acids in the protein and produces a number: the lower the number, the better. As the computers try to fold the protein chains in different ways, they attempt to find the particular shape that is closest to how the proteins really fold in our bodies. You can see pictures of the partially folded proteins in the right half of the grid agent screen. The left side shows various scores that tell how well folded the protein is so far, according to all of the rules. If a trial fold gets a worse score, the computer tries to refold it a different way, which may produce a better score. This is done millions of times for each protein, and scientists will look at only the lowest-scoring structures.
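
Keeping only the best 500 of millions of trial shapes is a classic streaming "top-k" problem. The sketch below is a minimal illustration, assuming each trial yields a (score, decoy_id) pair in which a lower score is better; the function name `best_decoys` and the random scores are hypothetical stand-ins, not the grid agent's actual code.

```python
import heapq
import random

KEEP = 500  # the page says the client returns its best 500 shapes


def best_decoys(scored_decoys, keep=KEEP):
    """Return the `keep` (score, decoy_id) pairs with the LOWEST scores.

    Lower Rosetta-style scores are better. heapq.nsmallest consumes the input
    as a stream, so memory grows with `keep`, not with the number of trials.
    The result is sorted from best (lowest) to worst.
    """
    return heapq.nsmallest(keep, scored_decoys)


# Hypothetical stand-in for millions of folding trials: random scores.
rng = random.Random(42)
trials = ((rng.gauss(0.0, 10.0), trial_id) for trial_id in range(100_000))
top = best_decoys(trials)
print(len(top), top[0][0] <= top[-1][0])
```

Because the trials are fed in as a generator, the full list of scores is never held in memory, which matters when a single protein is tried millions of times.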

Graphical Overview of Project

- The project starts with human proteins from the human genome. These protein sequences are the result of a large amount of research in and of themselves (the Human Genome Project was a huge research effort carried out at numerous institutions, including the ISB). A great deal of interesting research is still ongoing to find all of the proteins in the human genome, using a mixture of computational and experimental efforts. We will fold the proteins in the human proteome that have no known structure (and often no known function).

- Rosetta structure prediction is the program we’ll use to predict the structures (folds) of these mystery proteins. Rosetta uses a scoring function to search through huge numbers of possible structures for a given sequence and choose the best ones (which it reports to us as predicted structures). Because there are a large number of possible conformations per sequence and a large number of sequences, we need astronomically large amounts of processor power to fold the human proteome.

- We’ll use the spare computing power from huge numbers of volunteers to run Rosetta on more than fifty thousand protein sequences.

- We’ll get one or more fold predictions for each protein. Not all predictions are correct, so we’ll also attach several numbers to each prediction that tell us how much we can trust it.

- We will cross-match the predicted structures with the large database of known structures (determined by X-ray crystallography and NMR spectroscopy) to see if our predicted fold has been seen before.

- If we find a match, that’s just the beginning. In biology, context is very important, and biologists use a large diversity of experiments and analysis techniques to carry out their work. There are several methods for getting at the function of unknown proteins, and structure is just one of them. Biologists can best use these fold predictions (the fourth step above) and fold matches (the fifth step above) when they are integrated with results from other relevant methods (When is a gene turned on or off? In what tissue is a protein expressed? Can I find this gene in other organisms? Where is this protein in a metabolic pathway?).
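
To see why the search needs so much computing power, consider a common textbook simplification (often discussed as Levinthal's paradox): assume each residue can adopt just three backbone states, independently of the others. The sketch below counts the resulting conformations for a 150-residue chain; the three-state assumption and the checking rate of one billion conformations per second are illustrative only. The numbers explain why a method like Rosetta has to sample promising conformations rather than enumerate all of them.

```python
import math


def conformation_count(n_residues, states_per_residue=3):
    """Count chain conformations if every residue independently adopts one of
    `states_per_residue` backbone states (a textbook simplification, not
    Rosetta's model)."""
    return states_per_residue ** n_residues


n = 150  # a typical protein length, as suggested elsewhere on this page
count = conformation_count(n)
print(f"{n} residues: about 10^{math.log10(count):.1f} conformations")

# Hypothetical rate: checking a billion conformations every second.
seconds = count / 1e9
print(f"exhaustive search at 1e9 per second: about 10^{math.log10(seconds):.0f} seconds")
```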

Central Dogma

- Genomic sequence is the final result produced by genome sequencing projects. For the human genome there would be one word (as shown in 1) for each chromosome. The 23 human chromosomes total ~3 billion letters, or bases. This represents a relatively stable place for a cell to store information.

- Genomic DNA is copied into complementary messenger RNA (mRNA) by RNA polymerase. RNA is less stable than DNA, so the cell can turn genes on and off by regulating how quickly they are transcribed into RNA messages.

- RNA is translated into protein sequence by the ribosome. Each three-letter chunk of the RNA sequence (a codon) is translated into one of the 20 amino acids, apart from the stop codons that end translation. Thus each mRNA codes for a single unique protein. The protein is made as a long, unfolded polypeptide that is not functional until it folds.

- Protein folding consists primarily of rotations around the chemical bonds in the backbone and side chains of the polypeptide, producing a conformation that allows the side chains to pack into a compact core. Here the nascent (unfolded) polypeptide is drawn schematically as a red zig-zag. The protein then folds spontaneously into the folded protein shown at the very bottom. See the description of the scoring functions used by Rosetta for more information about why a protein would do any of this; the short answer is that the side chains sticking off the backbone in the folded protein at the bottom make favorable contacts (“+” touching “-” and oily touching oily, for instance).
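
The transcription and translation steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming the standard genetic code and assuming the DNA template strand is written 3'→5', so that its base-by-base complement reads as mRNA 5'→3'. Starting at the first AUG is a simplification of how real ribosomes choose a start site, and the names `transcribe`, `translate` and `CODON_TABLE` are hypothetical.

```python
from itertools import product

BASES = "UCAG"
# Standard genetic code: codons in the order UUU, UUC, UUA, UUG, UCU, ...
# ('*' marks the three stop codons).
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}

# Base pairing from a DNA template base to the complementary RNA base.
DNA_TO_RNA_PAIR = {"A": "U", "T": "A", "G": "C", "C": "G"}


def transcribe(template_3to5):
    """Build mRNA (5'->3') as the complement of a DNA template strand written 3'->5'."""
    return "".join(DNA_TO_RNA_PAIR[base] for base in template_3to5)


def translate(mrna):
    """Translate mRNA codon by codon from the first AUG until a stop codon."""
    start = mrna.find("AUG")
    if start == -1:
        return ""
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        aa = CODON_TABLE[mrna[i:i + 3]]
        if aa == "*":  # stop codon: the ribosome releases the chain
            break
        protein.append(aa)
    return "".join(protein)


mrna = transcribe("TACAAACCGATT")  # hypothetical template strand
print(mrna, translate(mrna))       # -> AUGUUUGGCUAA MFG
```

The result is a string of one-letter amino acid codes, i.e. the unfolded chain; nothing in this step says how that chain will fold.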

What is a protein?

Proteins are far from being just things we eat. They are the molecular machines that carry out metabolism, they carry messages that direct development and enable the immune system to tell friend from foe, and they repair damage to our DNA after we’ve spent too much time in the sun. In short, proteins are at the center of human biology, and of all biology.

But what IS a protein? Most genes code for proteins. Proteins are polymers built from smaller monomers called amino acids (say, 150 at a time, though protein length varies from gene to gene). These strings of amino acids (different amino acids have different shapes and chemical properties) then fold up into more compact shapes that have specific functions. So nature can use the same 20 amino acids, which share a common backbone but have different variable groups, and the same ribosome (the machine that strings the amino acids together) to make an astronomically large variety of shapes and functions. By changing the order and type of amino acids in proteins, living things can come up with new functions and shapes; such changes arise through mutation. A mutation can replace one amino acid in a protein (say, in the hemoglobin in your blood) with another, or delete several amino acids from a protein (http://web.mit.edu/esgbio/www/dogma/mutants.html). Many research efforts are currently underway to let us rationally engineer protein sequences to make new functions and therapies. Most drugs carry out their functions by binding to the specific shapes that folded proteins make in cells. Understanding protein three-dimensional structure is one of many things we need to understand if we are to decode the human genome or the genome of a given pathogen. For more info on the central dogma of modern biology see:

http://en.wikipedia.org/wiki/Central_dogma

http://web.mit.edu/esgbio/www/dogma/dogmadir.html

http://www.emc.maricopa.edu

To see the 20 amino acids see:

http://web.mit.edu/esgbio/www/lm/proteins/aa/aminoacids.html

http://web.mit.edu/esgbio/www/lm/lmdir.html
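
The two kinds of mutation described in this section, replacing one amino acid with another and deleting several amino acids, can be illustrated with simple string operations on a protein sequence written in one-letter amino acid codes. The sequence and the helper names `substitute` and `delete` are hypothetical; positions are 1-based, as biologists usually count residues.

```python
def substitute(protein, position, new_residue):
    """Replace the amino acid at 1-based `position` with `new_residue`."""
    if not 1 <= position <= len(protein):
        raise ValueError("position out of range")
    i = position - 1
    return protein[:i] + new_residue + protein[i + 1:]


def delete(protein, position, length):
    """Delete `length` amino acids starting at 1-based `position`."""
    if position < 1 or length < 0 or position + length - 1 > len(protein):
        raise ValueError("deletion out of range")
    i = position - 1
    return protein[:i] + protein[i + length:]


wild_type = "MKTAYIAKQR"  # hypothetical sequence
print(substitute(wild_type, 3, "V"))  # -> MKVAYIAKQR
print(delete(wild_type, 4, 3))        # -> MKTAKQR
```

A one-letter change in the sequence can be enough to change how the chain folds, which is why these small edits can matter so much.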

Which proteins are important?

When faced with the question of which proteins to fold, we used the following criterion: choose proteins that are important to the people who will be donating the computing cycles. Predicting the structure of every protein in an organism with Rosetta will contribute to our understanding of many proteins in that genome and of how those proteins interact within the organism as a system. Can you imagine trying to fix a car or a machine knowing the function of only 60% of its components? That is the situation in which biomedical and biological researchers, to their credit, operate. Thus, anything that can shed light on these mystery proteins is of use to biology and medicine. These predictions will not be a magic bullet, but they will provide a resource for biologists working on the genomes we fold.

The first category of proteins to fold is the proteins in the human genome with no known structural homologs. Human proteins are the targets of drugs and the key to improving human health, so improving our understanding of these proteins has innumerable positive effects. Some human proteins in the blood are therapeutics in and of themselves. The second category consists of proteins found in the genomes of pathogens. Understanding the biology of the bacteria and viruses that cause disease will allow us to fight them better. Many of these proteins are drug targets or have roles in virulence that have yet to be fully understood. The last category consists of proteins found in the genomes of environmental microbes. These microbes represent the majority of molecular biodiversity on the planet, and understanding them and their role in our environment will be aided by a deeper understanding of their proteomes (the structures and functions of the proteins in their genomes). These microbes are responsible for global carbon and nitrogen cycles, degrade human waste products, and can carry out countless undiscovered enzymatic biosyntheses.

Drawing Proteins

Proteins are large, complicated molecules, so simplifying how we represent them visually is key to protein structure research.

- The chemical structure of a single amino acid. Amino acids are strung together by the ribosome in the order encoded on the mRNA for the protein. There are 20 amino acids to choose from, so R (see depiction below) can be any of 20 different chemical structures, depending on which amino acid the mRNA specifies at that position.

- A simpler way to write an amino acid.

- Three different amino acids forming the beginning of a protein.

- The backbone stays the same (so the ribosome can use the same machinery to add each new amino acid), while the side chains vary. Varying the order and composition of amino acids in proteins produces a huge diversity of chemical functions and structures, which is why nature can solve most of its problems with proteins.

Rosetta

Rosetta is a computer program for de novo protein structure prediction, where de novo means modeling in the absence of detectable sequence similarity to a previously determined three-dimensional protein structure. Rosetta uses short stretches of sequence similarity to structures in the Protein Data Bank [http://www.rcsb.org/pdb/] to estimate possible conformations for local sequence segments (three- and nine-residue segments). These segments are called fragments of local structure. Rosetta then assembles these pre-computed structure fragments by minimizing a global scoring function that favors hydrophobic burial and packing, strand pairing, compactness and energetically favorable residue pairings. Results from the fourth and fifth Critical Assessment of Structure Prediction (CASP4, CASP5) [http://predictioncenter.llnl.gov/] have shown that Rosetta is currently one of the best methods for de novo protein structure prediction and distant fold recognition. Using Rosetta-generated structure predictions, we were previously able to recapitulate or predict many functional insights not detectable from primary sequence. Rosetta was also recently used to generate both fold and function predictions for Pfam protein families that had no link to a known structure, resulting in many high-confidence fold predictions.

In spite of these successes, Rosetta has a significant error rate, as do all methods for distant fold recognition and de novo structure prediction. We therefore calculate not just the structure but also the probability that the predicted structure is correct, using the Rosetta confidence function, which partially mitigates this error rate by assessing the accuracy of predicted folds. Another unavoidable source of uncertainty, with respect to function prediction from structure, is the error associated with distilling function from fold matches: sometimes a single fold carries out more than one function.
The predictions generated by de novo structure prediction are thus best used in combination with other sources of putative or general functional information, such as proximity in protein association or gene regulatory networks. Making the predictions from this project available to the public in an easily accessible way is therefore a critical final step in this project. A QuickTime movie showing a protein (ubiquitin) being folded by Rosetta is available as 1d3z.mov or 1d3z.mpg.
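
The fragment-assembly idea described above can be illustrated with a toy Monte Carlo search. This is a minimal sketch under loudly stated assumptions: each residue is reduced to a single torsion angle, `toy_score` is a made-up stand-in for Rosetta's global scoring function, and moves are accepted with the Metropolis rule, one common way to minimize such a function. Rosetta's real move set, score terms and annealing schedule differ; all names here are hypothetical.

```python
import math
import random

FRAG_LEN = 3  # the page mentions three- and nine-residue fragments


def toy_score(angles):
    """Hypothetical stand-in for Rosetta's global score (lower is better):
    it simply rewards torsion angles near -60 degrees."""
    return sum((a + 60.0) ** 2 for a in angles) / len(angles)


def assemble(n_res, fragments, steps=2000, temperature=50.0, seed=0):
    """Monte Carlo fragment assembly on a one-angle-per-residue toy chain.

    Every fragment must contain exactly FRAG_LEN angles so that splicing it
    in keeps the chain length unchanged.
    """
    rng = random.Random(seed)
    current = [180.0] * n_res  # start from a fully extended chain
    current_score = toy_score(current)
    best, best_score = list(current), current_score
    for _ in range(steps):
        # Splice a randomly chosen fragment into a randomly chosen window.
        start = rng.randrange(n_res - FRAG_LEN + 1)
        frag = rng.choice(fragments)
        trial = current[:start] + list(frag) + current[start + FRAG_LEN:]
        trial_score = toy_score(trial)
        # Metropolis rule: keep improvements, occasionally keep worse moves.
        delta = trial_score - current_score
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_score = trial, trial_score
            if current_score < best_score:
                best, best_score = list(current), current_score
    return best, best_score


# Hypothetical fragment library: each fragment is FRAG_LEN torsion angles.
library = [(-60.0, -45.0, -60.0), (-120.0, 130.0, -120.0), (-65.0, -40.0, -55.0)]
structure, score = assemble(12, library)
print(round(score, 1))
```

Accepting an occasional uphill move lets the search climb out of local minima; the fixed `seed` makes the run reproducible.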

Rosetta Score

Rosetta uses a scoring function to judge different conformations (shapes or packings of amino acids within the protein). The simulation consists of making moves (changing the backbone torsion angles, that is, rotations around bonds, of a stretch of amino acids) and then scoring the new conformation. The Rosetta score is a weighted sum of component scores, each of which judges a different property. The environment score judges how well the hydrophobic (oily) residues pack together to form a core, while the pair-score judges how compatible touching residues are with each other, one pair at a time.

Environment score: The formation of a hydrophobic core, driven by the hydrophobic effect, is for most proteins the central driving force of protein folding. The Rosetta environment score rewards burial of hydrophobic residues in a compact hydrophobic core and penalizes exposure of these oily groups to solvent. I’ve represented hydrophobic residues as orange stars. The left conformation is good (all the hydrophobic residues together), while the rightmost conformation is bad (the hydrophobic amino acids are not touching).

Pair-score: Two conformations of a polypeptide are shown: one (top) where the chain is folded back on itself, bringing two cysteines together (yellow + yellow = possible disulphide bond) and forming a salt bridge (blue + red = opposites attract). The conformation at the bottom makes neither of these pairings, so the pair-score would favor the top conformation.
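
The idea of a weighted sum of component scores can be sketched on a two-dimensional square lattice, in the spirit of the simple HP lattice models used in teaching; this is a swapped-in simplification, not Rosetta's actual energy function. Residues are written as `H` (hydrophobic), `+` and `-` (charged), `C` (cysteine) or `P` (anything else), and the contact rules, term names and weights in `WEIGHTS` are hypothetical. As with the Rosetta score, lower is better.

```python
from itertools import combinations

# Hypothetical weights for the two component scores.
WEIGHTS = {"env": 1.0, "pair": 0.5}


def contacts(coords):
    """Pairs (i, j) that touch on the lattice but are not chain neighbours."""
    result = []
    for i, j in combinations(range(len(coords)), 2):
        if j - i < 2:
            continue  # bonded neighbours always touch; ignore them
        (xi, yi), (xj, yj) = coords[i], coords[j]
        if abs(xi - xj) + abs(yi - yj) == 1:
            result.append((i, j))
    return result


def env_score(seq, coords):
    """Reward each hydrophobic-hydrophobic contact (buried oily residues)."""
    return -sum(1 for i, j in contacts(coords) if seq[i] == seq[j] == "H")


def pair_score(seq, coords):
    """Judge touching residues one pair at a time."""
    score = 0
    for i, j in contacts(coords):
        if {seq[i], seq[j]} == {"+", "-"}:  # salt bridge: opposites attract
            score -= 1
        elif seq[i] == seq[j] == "C":  # two cysteines: possible disulphide
            score -= 1
        elif seq[i] == seq[j] and seq[i] in "+-":  # like charges repel
            score += 1
    return score


def total_score(seq, coords):
    """Weighted sum of the component scores (lower is better)."""
    return WEIGHTS["env"] * env_score(seq, coords) + WEIGHTS["pair"] * pair_score(seq, coords)


folded = [(0, 0), (1, 0), (1, 1), (0, 1)]    # chain bent back on itself
extended = [(0, 0), (1, 0), (2, 0), (3, 0)]  # straight chain, no contacts
print(total_score("HPPH", folded), total_score("HPPH", extended))  # -> -1.0 0.0
```

Changing a weight changes how much each component contributes to the total, which is how a weighted-sum score balances different physical effects.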

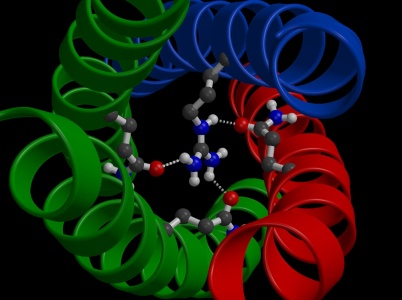

The Amino Acids

When we display proteins, we often use different coloring schemes to help us see the interactions taking place between the different amino acids. We have used the following color scheme for the Human Proteome Folding Project:

Hydrophobic (oily): orange

Acidic (negatively charged): red

Basic (positively charged): blue

Histidine (neutral or positively charged): purple

Sulphur-containing residues: yellow

Everything else (even though every amino acid is special): green
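
One way to apply this color scheme in code is a lookup table from one-letter amino acid codes to colors. The page does not list which residues count as hydrophobic, so the membership of the orange group below is an assumption, as is placing methionine with the sulphur-containing residues; everything unlisted falls back to green. The names `COLOR_GROUPS` and `residue_color` are hypothetical.

```python
# Color groups from the scheme above. ASSUMPTION: which residues are
# "hydrophobic" is our choice; methionine is put with the sulphur group.
COLOR_GROUPS = {
    "orange": "AVLIFWP",  # hydrophobic (oily), assumed membership
    "red": "DE",          # acidic
    "blue": "KR",         # basic
    "purple": "H",        # histidine
    "yellow": "CM",       # sulphur-containing
}

# Invert the groups into a per-residue lookup table.
COLOR_OF = {aa: color for color, members in COLOR_GROUPS.items() for aa in members}


def residue_color(code):
    """Color for a one-letter amino acid code; unlisted residues are green."""
    return COLOR_OF.get(code.upper(), "green")


print([residue_color(aa) for aa in "MKDHLG"])
```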

More information for Scientists

Read more in our recent journal articles:

Application to Halobacterium NRC-1: [http://genomebiology.com/]

Application to the initial annotation of Haloarcula marismortui: [http://www.genome.org/]

Application to the annotation of Pfam domains of unknown function: [http://www.sciencedirect.com/]

The earliest papers on Rosetta de novo structure prediction (including works by Kim Simons, Rich Bonneau, Charlie EM Strauss, Chris Bystroff, Ingo Ruczinski, Carol Rohl, Phil Bradley, Lars Malmstrom, Dylan Chivian, David Kim, Jens Meiler, Jack Schonbrun, David Baker, and others) can be found at: http://bakerlab.org

Review of de novo structure prediction methods: annual-rev-bonneau.pdf

[http://arjournals.annualreviews.org]

One-at-a-time Rosetta server (the Robetta server), hosted at ISB and Los Alamos National Labs (Charlie EM Strauss): [http://robetta.bakerlab.org/] Papers describing Robetta: [http://www3.interscience.wiley.com]

[http://www.ncbi.nlm.nih.gov]

PEOPLE

Read more about the scientists at the ISB and the University of Washington who are leading the Human Proteome Folding Project. For more information on this project, direct scientific inquiries to either Richard Bonneau or proteomefolding@systemsbiology.org.

ISB:

Dr. Richard Bonneau: rbonneau@systemsbiology.org

Dr. Bonneau is the technical lead on the Human Proteome Folding Project. Dr. Bonneau's expertise is primarily in ab initio protein structure prediction, protein folding, and regulatory network inference. He is currently focused on applying structure prediction and structural information to functional annotation and to the modeling and prediction of regulatory and physical networks. Dr. Bonneau is working to develop general methods to solve protein structures and protein complexes with small sets of distance constraints derived from chemical cross-linking. At the ISB, Dr. Bonneau also works on a number of systems biology data-integration and analysis algorithms, including algorithms designed to infer global regulatory networks from systems biology data.

Dr. Leroy Hood

Dr. Leroy Hood is recognized as one of the world's leading scientists in molecular biotechnology and genomics. A passionate and dedicated researcher, he holds numerous patents and awards for his scientific breakthroughs and prides himself on his lifelong commitment to making science accessible and understandable to the general public, especially children. One of his foremost goals is bringing hands-on, inquiry-based science to K-12 classrooms.

[more: http://www.systemsbiology.org ]

University of Washington:

Lars Malmstroem: larsm@u.washington.edu

Lars Malmstroem has engineered the infrastructure (at the ISB/UW end) needed to handle the vast, highly interconnected data sets that this project will generate; he will also be heavily involved in developing the right data-integration schemes to best deliver the resulting predictions to biologists.

Dr. David Baker:

Rosetta was developed initially in the laboratory of David Baker by a team that included a large number of scientists at several institutions. The goal of current research in his laboratory is to develop improved models of intra- and intermolecular interactions and to apply these models to the prediction and design of macromolecular structures and interactions. Prediction and design applications can be of great biological interest in their own right, and they also provide very stringent, objective tests that drive improvements to the model and increases in fundamental understanding.

[more: http://depts.washington.edu/ ]
